The folks at Apple have just revealed that they’re planning to scan iPhones in the U.S. for images of child sexual abuse.

Child protection groups are applauding the idea, but others are worried about security and about how the government could get involved and misuse the system.

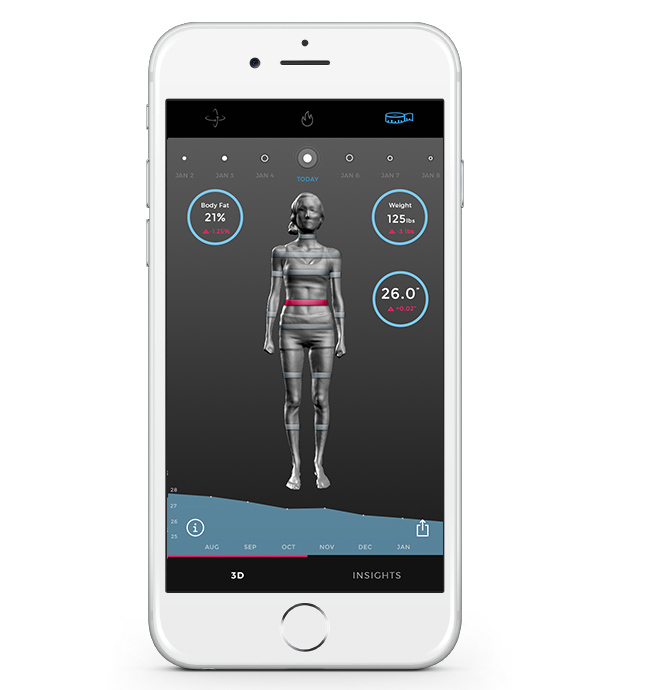

A tool called NeuralHash would run on iPhones to detect known child pornography images before they get uploaded to iCloud. If an image is flagged, a human will review it, and if the match is confirmed, the person’s account will be suspended and the National Center for Missing and Exploited Children will be notified immediately.

Before you get up in arms about the cute baby bathtub pics that you like to post on da Gram, know that the system will only flag pictures that match images already in a database of known child pornography. So continue to take those cute bubble bath pictures.
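
For the curious, here is a rough sketch of what "only flag pictures that are already in the database" could look like in code. This is not Apple's implementation: NeuralHash is not reproduced here, so plain SHA-256 stands in for it as an assumption, and the function names and the placeholder data are made up for illustration.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    # Assumption: SHA-256 of the raw bytes stands in for NeuralHash.
    return hashlib.sha256(image_bytes).hexdigest()


def should_flag(image_bytes: bytes, known_fingerprints: set[str]) -> bool:
    # Flag only when the fingerprint is already in the database;
    # the content of the photo is never judged on its own.
    return fingerprint(image_bytes) in known_fingerprints


# Hypothetical placeholder data, not real images.
known_entry = b"placeholder for an image already in the database"
bathtub_pic = b"placeholder for a brand-new family photo"
known_fingerprints = {fingerprint(known_entry)}

print(should_flag(bathtub_pic, known_fingerprints))  # new photo, no match
print(should_flag(known_entry, known_fingerprints))  # exact copy of a known entry
```

One caveat on the stand-in: SHA-256 only matches byte-for-byte identical files, while a perceptual hash like NeuralHash is meant to keep matching after resizing or recompression. The lookup step is the same idea either way, and a flag would only send the image on to human review.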

Opponents of Apple’s plan worry that someone could try to frame innocent people by sending them pictures that would get them in trouble.

I should also point out that other tech companies like Google, Microsoft, and Facebook have been doing this for years, and Apple is only now starting. Apple has been under pressure from the government for years to allow more surveillance of encrypted data.

What do you think about Apple’s initiative?